Deep dive into gRPC.

gRPC, HTTP/2 and the alternatives.

Hello subscribers! Thank you so much for taking the time to read my first article.

I enjoy dabbling in various open source projects, and I decided to write about what I learn in the hope that it might be useful to others.

So let's get started with our first topic, gRPC.

1. Introduction

1) What is RPC?

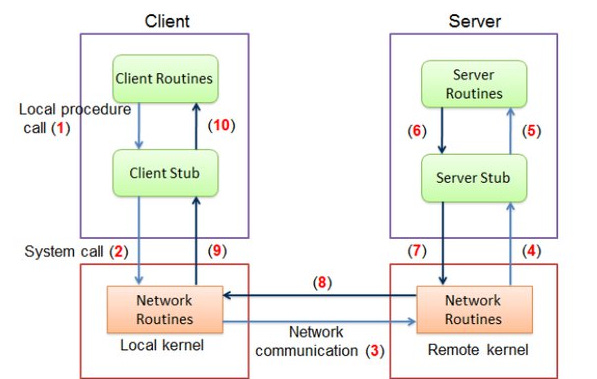

Remote Procedure Call (RPC) is a communication method that allows you to use a program or service located on another computer over a network as if you were calling it locally. In simple terms, RPC allows one system to remotely call a function or procedure on another system, with the necessary parameters being sent over the network. The core idea behind RPC is to allow developers to use remote services as if they were calling functions within the same memory space, without having to deal with the network directly. This makes it easier for developers to implement communication between services in distributed systems or microservice architectures.
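To make the idea concrete, here is a minimal sketch using Python's built-in XML-RPC modules. XML-RPC is an older, text-based RPC protocol, not gRPC, but it shows the core idea: the client calls `proxy.add(2, 3)` as if `add` were a local function, while the call actually travels over HTTP to a server. The `add` function and running the server in a background thread on a random local port are illustrative choices.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    # Ordinary function that will run on the "remote" side.
    return a + b


# Port 0 asks the OS for any free port; server_address reports the real one.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# The proxy makes the remote function look like a local method.
proxy = ServerProxy(f"http://127.0.0.1:{port}/")
result = proxy.add(2, 3)  # serialized, sent over HTTP, executed, and returned
print(result)  # 5

server.shutdown()
server.server_close()
```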

2) What is gRPC?

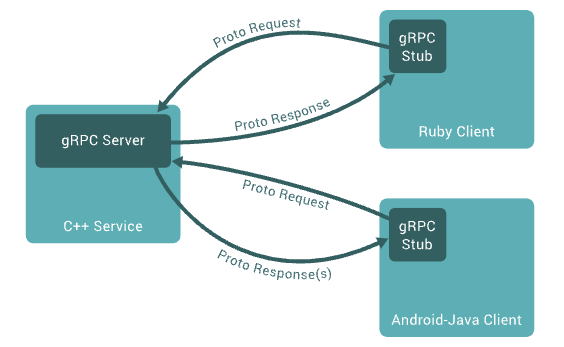

gRPC is an open-source RPC framework developed by Google. gRPC is based on a serialization mechanism called protocol buffers and the HTTP/2 protocol. We'll cover protocol buffers and the HTTP/2 protocol in more detail later. gRPC is widely used for service-to-service communication in microservice architectures and is characterized by high performance, simple integration, automatic code generation, and support for many languages.

3) History and Background

gRPC was released as open source by Google in 2015 as the successor to Stubby, the internal RPC framework Google had long used for service-to-service communication. As microservice architectures spread, gRPC became a popular choice for communication between internal services well beyond Google.

The emergence of gRPC was driven by the proliferation of microservice architectures and the need for an efficient way to communicate between services. REST APIs running over HTTP/1.1 can struggle with large volumes of fine-grained traffic because of that protocol's limitations. (We'll talk more about the limitations of HTTP/1.1 later.) Google developed gRPC based on the HTTP/2 protocol to provide higher performance and efficiency.

2. Core Concept of gRPC - Protocol Buffer

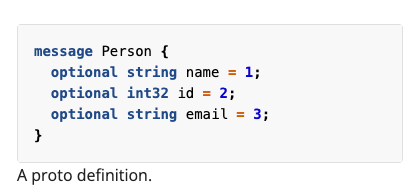

Protocol Buffers, or Protobuf for short, is a language-neutral and platform-neutral serialization framework developed by Google. Protobuf defines a structured data format used to serialize and deserialize data, and can serialize data more compactly and quickly than XML or JSON. Developers define the message format by writing a .proto file, and based on that, code generators for various programming languages can automatically generate data structures. This approach maximizes the efficiency of data exchange and provides high performance while minimizing network bandwidth usage.
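To see why protobuf output is compact, the sketch below hand-encodes `HelloRequest{name: "you"}` (the message from the helloworld.proto example later in this article) following the protobuf wire format: each field is written as a varint tag (the field number shifted left by 3, ORed with a wire type), and strings use wire type 2 followed by a length prefix. Field names never appear on the wire. This is for illustration only; in practice the generated code does this for you.

```python
import json


def encode_varint(value: int) -> bytes:
    # Base-128 varint: 7 bits per byte, high bit set on every byte except the last.
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    tag = (field_number << 3) | 2  # wire type 2 = length-delimited
    return encode_varint(tag) + encode_varint(len(data)) + data


proto_bytes = encode_string_field(1, "you")  # field 1 is `name` in HelloRequest
json_bytes = json.dumps({"name": "you"}).encode("utf-8")
print(proto_bytes)                        # b'\n\x03you'
print(len(proto_bytes), len(json_bytes))  # 5 15
```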

3. Core Concept of gRPC - HTTP/2

1) Limitations of HTTP/1.1

HTTP/1.1 has long been the foundation of web communications, but it has some significant limitations that make it difficult to keep up with modern Internet conditions and performance requirements.

Head-of-Line Blocking

Over a single connection, HTTP/1.1 handles requests one at a time. Even with pipelining, the server must return responses in the order the requests arrived, so if one response is slow, every response queued behind it is delayed as well. This phenomenon is called head-of-line blocking, and it has been a major source of performance degradation.

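Inefficient use of TCP connections

Although HTTP/1.1 keeps connections open by default, each connection can carry only one outstanding request at a time, so clients open several parallel TCP connections to the same server (browsers often open around six per host) to get concurrency. Every extra connection adds handshake overhead (plus a TLS handshake for HTTPS) and starts in TCP slow start, the congestion-control phase in which the sending rate ramps up gradually, so short-lived connections rarely reach full throughput.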

Overhead of sending messages

HTTP/1.1 sends headers as uncompressed text, and the full set must be sent with every request even though most of it (cookies, user agent, accepted content types) is usually identical from one request to the next.

Lack of server push

In HTTP/1.1, the server cannot send data the client has not explicitly requested. The client has to discover and request every resource itself, which adds round trips in resource-heavy scenarios such as web page loading.

2) HTTP/2

gRPC is a high-performance remote procedure call (RPC) framework built on top of HTTP/2. HTTP/2 provides a number of enhancements that allow for more efficient communication than HTTP/1.1. Multiplexing and header compression in particular are central to gRPC's performance, while server push is an HTTP/2 feature that gRPC itself does not use. Let's take a look at each of these features.

Multiplexing

HTTP/2's multiplexing feature allows multiple requests and responses to be in flight at the same time over a single TCP connection, which removes the HTTP-level “head-of-line blocking” problem of HTTP/1.1. Each request-response pair travels on its own stream, and the frames of different streams are interleaved on the connection, so a slow response no longer holds up the ones behind it. This reduces the number of connections needed, speeds up web page loading, and improves overall performance. (Blocking can still happen at the TCP level: a single lost packet delays every stream on the connection until it is retransmitted.)
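The sketch below illustrates the mechanism using the real HTTP/2 frame header layout (RFC 9113): every frame carries a stream ID, so frames from different requests can be interleaved on one connection and reassembled independently. It is deliberately simplified: only DATA frames, with no HEADERS frames, HPACK, flow control, or real sockets. Stream IDs 1 and 3 are used because client-initiated streams have odd IDs. Note that stream 3 finishes before stream 1 even though stream 1 started first.

```python
import struct
from collections import defaultdict

DATA = 0x0        # HTTP/2 DATA frame type
END_STREAM = 0x1  # flag marking the last frame of a stream


def make_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    # 9-byte header: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream id.
    length = len(payload).to_bytes(3, "big")
    return length + struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF) + payload


def read_frames(buf: bytes):
    # Walk the byte stream frame by frame, yielding (type, flags, stream_id, payload).
    offset = 0
    while offset < len(buf):
        length = int.from_bytes(buf[offset:offset + 3], "big")
        frame_type, flags, stream_id = struct.unpack(">BBI", buf[offset + 3:offset + 9])
        yield frame_type, flags, stream_id & 0x7FFFFFFF, buf[offset + 9:offset + 9 + length]
        offset += 9 + length


# Two responses share one connection; their frames are interleaved on the wire.
wire = b"".join([
    make_frame(DATA, 0, 1, b"Hello, "),
    make_frame(DATA, 0, 3, b"quick "),
    make_frame(DATA, END_STREAM, 3, b"reply"),  # stream 3 completes first
    make_frame(DATA, END_STREAM, 1, b"world"),
])

bodies = defaultdict(bytes)
finished = []
for _type, flags, stream_id, payload in read_frames(wire):
    bodies[stream_id] += payload  # reassemble each stream independently
    if flags & END_STREAM:
        finished.append(stream_id)

print(dict(bodies))  # {1: b'Hello, world', 3: b'quick reply'}
print(finished)      # [3, 1]
```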

Header compression

HTTP/2 increases network efficiency through header compression. Whereas HTTP/1.1 required duplicate header information to be sent with every request and response, HTTP/2 uses the HPACK compression format to compress these headers. This is especially important in mobile environments, where reducing header size can save data usage and speed up communication.
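The core idea of HPACK is indexing: common header fields come from a predefined static table, and fields that are sent once are remembered in a per-connection dynamic table, so later requests can refer to them by a small index instead of repeating the text. The toy model below shows only that idea. The five static entries are real (RFC 7541, Appendix A), and new dynamic entries are placed at the front, as in HPACK, but the real binary encoding, Huffman coding, and size-based eviction are omitted. The headers mimic those of a gRPC call, which is sent as a POST to `/package.Service/Method` with content type `application/grpc`.

```python
# A few entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":scheme", "http"): 6,
    (":scheme", "https"): 7,
}
STATIC_TABLE_SIZE = 61  # dynamic-table indexes start right after the static table


class ToyHeaderEncoder:
    """Toy model of HPACK indexing; not the real binary encoding."""

    def __init__(self):
        self.dynamic = []  # newest entry first, as in HPACK

    def encode(self, headers):
        out = []
        for field in headers:
            if field in STATIC_TABLE:
                out.append(STATIC_TABLE[field])  # sent as a small index
            elif field in self.dynamic:
                out.append(STATIC_TABLE_SIZE + 1 + self.dynamic.index(field))
            else:
                out.append(field)  # sent literally once...
                self.dynamic.insert(0, field)  # ...then remembered for later requests
        return out


grpc_headers = [
    (":method", "POST"),
    (":scheme", "https"),
    (":path", "/helloworld.Greeter/SayHello"),
    ("content-type", "application/grpc"),
]
encoder = ToyHeaderEncoder()
first = encoder.encode(grpc_headers)   # two indexes, two literals
second = encoder.encode(grpc_headers)  # every field is now just an index
print(second)  # [3, 7, 63, 62]
```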

Server Push

The server push feature allows a server to send resources to a client before the client requests them. For example, when a web page is requested, the server can proactively send the CSS and JavaScript files the page needs along with the HTML document, saving the client the round trips of requesting them separately. Note that gRPC does not rely on server push; its server-to-client streaming is built on ordinary HTTP/2 streams.

4. gRPC Pros and Cons - When should I use gRPC?

1) Pros of gRPC

High performance: gRPC is built on top of HTTP/2 and uses features like multiplexing and header compression, combined with compact protocol buffer messages. This enables low latency and high throughput.

Multi-language support: gRPC supports a wide range of programming languages. This allows for seamless interoperation between services written in different languages.

Bi-directional streaming: gRPC supports bi-directional streaming between client and server, allowing for real-time data communication. This is useful in chat applications, real-time data feeds, and more.

Automatic code generation: gRPC uses protocol buffers to automatically generate client and server code from interface definition files. This can increase development productivity and reduce errors.

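Security: gRPC has built-in support for TLS (including mutual TLS) through channel and server credentials, so encrypting and authenticating traffic is straightforward. It is not automatic, though: the example later in this article uses insecure_channel and add_insecure_port, which send plaintext.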

2) Cons of gRPC

Learning curve: Developers new to gRPC and protocol buffers may have a learning curve, especially if they are used to RESTful APIs based on HTTP/1.1.

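Limited browser support: Browsers do not expose the low-level HTTP/2 control (such as trailers) that gRPC needs, so they cannot call gRPC services directly. To communicate with web browsers, you need to use gRPC-Web, a variant of the protocol, usually together with a proxy such as Envoy that translates between gRPC-Web and gRPC.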

Debugging difficulties: Because messages are encoded as binary protocol buffers, payloads are not human-readable, which makes them harder to inspect and debug than text-based JSON or XML.

Configuration complexity: Initial setup can be complex, including setting up HTTP/2 and TLS. This can be overkill, especially for small projects or simple services.

3) When should I use gRPC?

When you need high performance: For systems that require low latency and high throughput, gRPC is a great fit. For example, it is advantageous for real-time communication, streaming data processing, and high-frequency communication between microservices.

When you need interoperability between different languages: gRPC is a good choice when your services are written in multiple programming languages and you need seamless communication between them.

When you need bi-directional streaming: gRPC works well for applications that require real-time, bi-directional communication between client and server.

When strict interface definition is required: gRPC's interface definition with protocol buffers is useful in systems that require clear contracts between services.

When security is important: gRPC has built-in support for TLS and mutual TLS, making it easy to encrypt and authenticate communication in security-critical applications.

5. Create a gRPC service with Python

1) Installation and creating the protocol buffer

Install gRPC and the protocol buffer compiler:

```shell
pip install grpcio
pip install grpcio-tools
```

Let's create a protocol buffer definition file called helloworld.proto.

```protobuf
syntax = "proto3";

package helloworld;

// The greeting service definition.
service Greeter {
  // Sends a greeting
  rpc SayHello (HelloRequest) returns (HelloReply) {}
}

// The request message containing the user's name.
message HelloRequest {
  string name = 1;
}

// The response message containing the greetings
message HelloReply {
  string message = 1;
}
```

2) Generate the server and client code

Generate the server and client code from the .proto file you created.

```shell
python -m grpc_tools.protoc -I. --python_out=. --grpc_python_out=. helloworld.proto
```

3) Implement the server

Implement the gRPC server based on the generated _pb2.py and _pb2_grpc.py files.

```python
from concurrent import futures

import grpc

import helloworld_pb2
import helloworld_pb2_grpc


class GreeterServicer(helloworld_pb2_grpc.GreeterServicer):
    # Implements the SayHello RPC declared in helloworld.proto.
    def SayHello(self, request, context):
        return helloworld_pb2.HelloReply(message='Hello, %s!' % request.name)


def serve():
    server = grpc.server(futures.ThreadPoolExecutor(max_workers=10))
    helloworld_pb2_grpc.add_GreeterServicer_to_server(GreeterServicer(), server)
    # Plaintext port on all interfaces; fine for local testing, not production.
    server.add_insecure_port('[::]:50051')
    server.start()
    server.wait_for_termination()


if __name__ == '__main__':
    serve()
```

4) Implement the client

```python
import grpc

import helloworld_pb2
import helloworld_pb2_grpc


def run():
    # Open a plaintext channel to the server started above.
    with grpc.insecure_channel('localhost:50051') as channel:
        stub = helloworld_pb2_grpc.GreeterStub(channel)
        response = stub.SayHello(helloworld_pb2.HelloRequest(name='you'))
    print("Greeter client received: " + response.message)


if __name__ == '__main__':
    run()
```

You have now set up the gRPC environment and created your first service. If you start the server and then run the client, the client prints “Greeter client received: Hello, you!”.

However, if you take a closer look at the code, a few questions arise. The .proto file only declares a service named Greeter, so where did the GreeterServicer class used in the server code come from? And what is the stub in the client code? Let's talk about that.

6. gRPC in detail - Servicer and Stub

1) Defining a service in the protocol buffer

Service definition is done in the .proto file. Within this file, the developer defines the service name, methods, request messages, response messages, etc. Each method has one request type and one response type, which are defined according to the proto3 syntax. With these definitions, gRPC provides an RPC interface based on a strong type system.

2) What is a Servicer?

In gRPC, a Servicer is a server-side class that handles requests from clients. It implements the service interface defined in the .proto file: when you compile the .proto file, the code generator creates a base Servicer class (GreeterServicer in our example) in the *_pb2_grpc.py file. The developer subclasses it, overrides each RPC method with the actual logic, and registers the instance with the server (add_GreeterServicer_to_server in our example) so that the gRPC server can route client requests to it.

3) What is a Stub?

On the client side, we use a Stub to call the methods defined on the server. A Stub is a proxy object that lets the client call server methods as if they were methods on a local object. The code generator creates the Stub class (GreeterStub in our example) in the same *_pb2_grpc.py file, based on the service definition. Client developers create a Stub from a channel and use it to call server methods, pass request messages, and receive responses.
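The sketch below imitates, in plain Python, the roles the generated code plays. Everything in it is hypothetical: InMemoryChannel replaces the real network channel, JSON replaces protobuf serialization, and add_greeter_to_channel stands in for what add_GreeterServicer_to_server does in the real code. What it does reflect is the real division of labor: the stub turns a method call into a request on the path `/helloworld.Greeter/SayHello` (gRPC names methods as `/package.Service/Method`), and the server side routes that path to the servicer method you implemented.

```python
import json


class InMemoryChannel:
    """Hypothetical stand-in for a network channel: routes bytes to handlers by path."""

    def __init__(self):
        self._handlers = {}

    def register(self, path, handler):
        self._handlers[path] = handler

    def call(self, path, payload: bytes) -> bytes:
        return self._handlers[path](payload)


class GreeterServicer:
    """Server side: the developer writes the business logic here."""

    def SayHello(self, request, context):
        return {"message": f"Hello, {request['name']}!"}


def add_greeter_to_channel(servicer, channel):
    """Hypothetical registration helper: decode, call the servicer, encode the reply."""
    def handle(payload):
        reply = servicer.SayHello(json.loads(payload), None)
        return json.dumps(reply).encode()
    channel.register("/helloworld.Greeter/SayHello", handle)


class GreeterStub:
    """Client side: a proxy whose methods look local but go through the channel."""

    def __init__(self, channel):
        self._channel = channel

    def SayHello(self, request):
        payload = json.dumps(request).encode()
        reply = self._channel.call("/helloworld.Greeter/SayHello", payload)
        return json.loads(reply)


channel = InMemoryChannel()
add_greeter_to_channel(GreeterServicer(), channel)
stub = GreeterStub(channel)
print(stub.SayHello({"name": "you"})["message"])  # Hello, you!
```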

7. gRPC's main features

1) Unary RPC

Unary RPC is the most basic communication pattern, where the client sends a single request to the server and the server returns a single response. This pattern is similar to the typical request-response model of web services. For example, if a client requests data, and the server finds and responds with that data, Unary RPC is used.

2) Server Streaming RPC

In Server Streaming RPC, the client sends a single request to the server, and the server sends multiple messages to the client in a stream. This pattern is useful when the server needs to send large amounts of data to the client in sequence. For example, a client requests a large amount of log data, and the server splits it up and sends it sequentially.

3) Client Streaming RPC

In Client Streaming RPC, the client sends a stream of messages to the server, and the server processes the stream and returns a final response to the client. This pattern is used when the client needs to send a large amount of data to the server, but a single response from the server is sufficient. For example, this is ideal for situations where a client uploads multiple image files, and the server processes them all and responds with a single message indicating success or failure.

4) Bidirectional Streaming RPC

Bidirectional Streaming RPC is the most flexible communication pattern: the client and server each send a stream of messages to the other at the same time. The two streams are independent, so the server can reply after every message, wait until it has received all of them, or interleave reads and writes however the application needs; gRPC preserves message order within each stream. For example, in a live chat application, the client and server need to constantly send messages back and forth, in which case Bidirectional Streaming RPC is ideal.
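In grpcio's Python API, the four patterns differ mainly in the shape of the servicer method: streamed requests arrive as an iterator, and streamed responses are produced by yielding from a generator. The sketch below puts those four shapes side by side. The service and method names are hypothetical, and plain dicts stand in for generated message classes so that it runs without grpcio; in a real service, each method would be declared in the .proto file with the stream keyword on the streamed side (for example, `rpc TailLogs (TailRequest) returns (stream LogLine);`).

```python
class LogServicer:
    """Hypothetical servicer; plain dicts stand in for generated message classes."""

    def GetLine(self, request, context):
        # Unary: one request in, one response out.
        return {"line": f"line {request['index']}"}

    def TailLogs(self, request, context):
        # Server streaming: one request in, a generator of responses out.
        for i in range(request["count"]):
            yield {"line": f"line {i}"}

    def UploadImages(self, request_iterator, context):
        # Client streaming: consume every incoming message, then reply once.
        total = sum(len(req["data"]) for req in request_iterator)
        return {"ok": True, "bytes_received": total}

    def Chat(self, request_iterator, context):
        # Bidirectional: here we echo each message, but any interleaving is allowed.
        for req in request_iterator:
            yield {"text": f"echo: {req['text']}"}


servicer = LogServicer()
print(servicer.GetLine({"index": 7}, None))          # {'line': 'line 7'}
print(list(servicer.TailLogs({"count": 2}, None)))  # [{'line': 'line 0'}, {'line': 'line 1'}]
print(servicer.UploadImages(iter([{"data": b"abc"}, {"data": b"de"}]), None))
print(list(servicer.Chat(iter([{"text": "hi"}, {"text": "bye"}]), None)))
```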

8. gRPC and its competitors

In addition to gRPC, there are a number of other communication protocols and data exchange methods, each of which may be better suited for specific situations.

1) gRPC vs REST APIs

Advantages of gRPC

Performance: gRPC uses protocol buffers to send lightweight messages, making communication more efficient compared to REST APIs that use JSON/XML.

Language independent: Generates client and server code in many languages from a single .proto contract, making it easy for services written in different languages to communicate.

Streaming support: Supports a variety of communication patterns, including bi-directional streaming.

Advantages of the REST API

Universal use: REST is widely used, so many developers are familiar with it and a wide variety of tools and libraries exist.

Uses plain HTTP: REST APIs typically run over ordinary HTTP with text payloads, so they work out of the box with browsers, caches, proxies, and tools such as curl.

Flexibility: Supports a variety of data formats (JSON, XML, etc.).

Selection criteria

If you need to transfer large amounts of data or have real-time communication that requires high performance, gRPC might be a good fit. On the other hand, if your API needs to be widely publicized and consumed by a wide variety of clients, REST may be a better fit.

2) gRPC vs GraphQL

Advantages of gRPC

Performance: Efficient data transfer through protocol buffers.

Language Independent: Supports many different programming languages.

Streaming: Supports bi-directional streaming.

Advantages of GraphQL

Data fetching: Allows clients to request the exact data they need.

Single Endpoint: All requests are processed through a single endpoint.

Type system: Provides a robust type system to document and validate your API.

Selection criteria

If the client needs to request exactly the data it needs from the server, or if complex data queries are required, GraphQL may be a better fit. On the other hand, for services where high performance and real-time communication are important, gRPC might be a better choice.

3) gRPC vs Thrift

Advantages of gRPC

Performance: Efficient data transfer using protocol buffers.

Language Independent: Supports many programming languages.

Streaming: Supports bi-directional streaming.

Advantages of Thrift

Language support: Thrift supports more programming languages than gRPC.

Flexibility: Supports a variety of protocols (Binary, Compact, JSON, etc.) and transport mechanisms.

Selection Criteria

If support for multiple programming languages and protocol flexibility are important to you, you may want to consider Thrift. However, if you need high performance, efficient data transfer, and bi-directional streaming, gRPC may be a better fit.

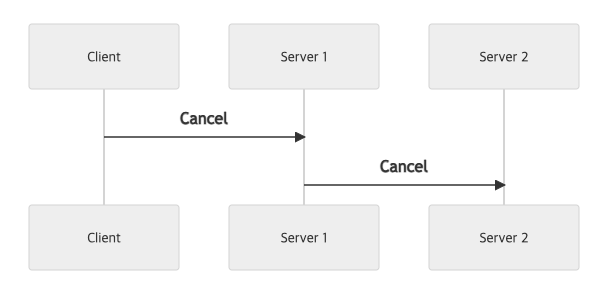

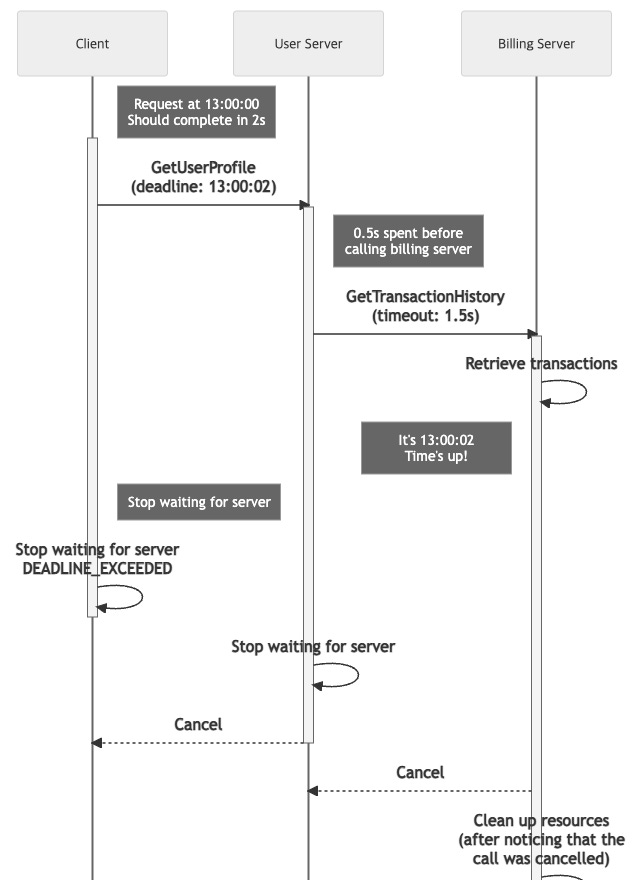

9. Cancellation and Deadlines

Cancellation and deadlines in gRPC make communication between client and server more flexible and efficient. Cancellation allows the client to abort a call once it determines that it no longer needs the response. The server is notified, so it can stop the work it was doing for that call and spend its resources on other requests, but it only stops early if the handler actually checks for cancellation. How the client cancels depends on the language: in Go, for example, cancellation is propagated through a Context, while in Python you can call cancel() on the future returned by a stub method's future() variant. Used this way, cancellation reduces wasted work and makes network communication more robust.

A gRPC deadline, on the other hand, sets the maximum time a call may take to complete. The client sets the deadline when making a call, and if the call does not finish in time, it is terminated and fails with the DEADLINE_EXCEEDED status code. This prevents slow requests from piling up and helps keep the service responsive. The deadline is sent to the server, which should check how much time is left and avoid doing work whose result will never be used. Together, these features improve the performance and reliability of applications that use gRPC.
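In grpcio, the client sets a deadline by passing `timeout=` (in seconds) to a stub call, and on the server the ServicerContext exposes `time_remaining()` and `is_active()` so a handler can notice an expired deadline or a cancelled call. The sketch below models that server-side check without a network: ToyContext is a hypothetical stand-in that only mimics those two methods plus `cancel()`, and process_items is an illustrative handler that stops as soon as the call is no longer active.

```python
import time


class ToyContext:
    """Hypothetical stand-in for grpc.ServicerContext (time_remaining/is_active only)."""

    def __init__(self, timeout: float):
        self._deadline = time.monotonic() + timeout  # absolute deadline
        self._cancelled = False

    def time_remaining(self) -> float:
        return max(0.0, self._deadline - time.monotonic())

    def cancel(self) -> None:
        self._cancelled = True  # what a client-side cancellation would signal

    def is_active(self) -> bool:
        return not self._cancelled and self.time_remaining() > 0


def process_items(items, context, cost_per_item: float):
    """Illustrative handler: checks the context before each unit of work."""
    done = []
    for item in items:
        if not context.is_active():  # deadline passed or call cancelled: stop early
            break
        time.sleep(cost_per_item)  # simulate work
        done.append(item)
    return done


ctx = ToyContext(timeout=0.05)
partial = process_items(range(100), ctx, cost_per_item=0.01)
print(len(partial) < 100)  # True: work stopped once the deadline passed

cancelled = ToyContext(timeout=10)
cancelled.cancel()
print(process_items([1, 2, 3], cancelled, cost_per_item=0.0))  # []
```

On the client, the matching real call would look like `stub.SayHello(request, timeout=2.0)`, which raises a grpc.RpcError with StatusCode.DEADLINE_EXCEEDED if no response arrives in time.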

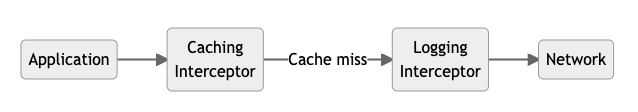

10. Interceptors

Interceptors in gRPC are a powerful feature that allow you to intercept calls between the client and server and execute additional logic. Interceptors are useful for increasing reusability of code by allowing common actions to be performed before and after a call, and for implementing features such as logging, authentication, and monitoring. Client interceptors can perform specific actions before sending a request or process it after receiving a response, while server interceptors can perform validation before processing a request or apply additional logic before sending a response. This can greatly improve the scalability and maintainability of your gRPC application.
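On the server side, this kind of validation is typically written as a grpc.ServerInterceptor. Its `intercept_service` method receives the call details (including incoming metadata) and either hands the call to the real handler via `continuation` or returns a different handler. The sketch below rejects calls whose `authorization` metadata does not match an expected token; the token format is an assumption. Because the rejection handler is unary-unary, this version only fits unary-unary methods such as SayHello.

```python
import grpc


class AuthServerInterceptor(grpc.ServerInterceptor):
    """Rejects calls whose 'authorization' metadata doesn't match the expected token."""

    def __init__(self, expected_token: str):
        self._expected = expected_token

        def deny(request, context):
            context.abort(grpc.StatusCode.UNAUTHENTICATED, "invalid or missing token")

        # Handler returned instead of the real one when validation fails.
        self._deny_handler = grpc.unary_unary_rpc_method_handler(deny)

    def intercept_service(self, continuation, handler_call_details):
        metadata = dict(handler_call_details.invocation_metadata or ())
        if metadata.get("authorization") == self._expected:
            return continuation(handler_call_details)  # hand off to the real servicer
        return self._deny_handler
```

It is registered when the server is created, for example `grpc.server(futures.ThreadPoolExecutor(max_workers=10), interceptors=[AuthServerInterceptor("Bearer <token>")])`.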

For example, you can use a client interceptor to add a user authentication token to the request metadata (gRPC's equivalent of HTTP headers) before the request is sent to the server. This way, you can enforce authentication logic centrally for all service calls.

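```python
import collections

import grpc


class _ClientCallDetails(
        collections.namedtuple(
            "_ClientCallDetails", ("method", "timeout", "metadata", "credentials")),
        grpc.ClientCallDetails):
    """Copy of the call details with replaced metadata."""


class AuthInterceptor(grpc.UnaryUnaryClientInterceptor):
    def __init__(self, token):
        self._token = token

    def intercept_unary_unary(self, continuation, client_call_details, request):
        # Keep any metadata the caller already set and append the auth token.
        metadata = list(client_call_details.metadata or [])
        metadata.append(("authorization", self._token))
        details = _ClientCallDetails(
            client_call_details.method, client_call_details.timeout,
            metadata, client_call_details.credentials)
        return continuation(details, request)


# Every stub created from this channel sends the token on unary-unary calls.
channel = grpc.intercept_channel(
    grpc.insecure_channel("localhost:50051"), AuthInterceptor("Bearer <token>"))
```

11. Error Handling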

Error handling in gRPC is critical to effectively handle exceptions that may occur during communication between the server and client. gRPC natively uses status codes and messages to represent errors. This allows the client to perform appropriate error handling based on the response it receives from the server.

gRPC defines 17 status codes (OK plus 16 error codes), including UNKNOWN, INVALID_ARGUMENT, DEADLINE_EXCEEDED, and NOT_FOUND. Each code indicates the type of error that occurred, which allows the client to determine the nature of the error and respond appropriately.

For example, if the server cannot find a specific resource, it might pass an error message to the client with the NOT_FOUND error code. The client can check this error code and display the appropriate message to the user.

```python
from grpc import StatusCode


def get_resource(request, context):
    # find_resource_by_id is a placeholder for your own data-access code.
    resource = find_resource_by_id(request.id)
    if resource is None:
        # abort() raises an exception, ending the RPC with NOT_FOUND.
        context.abort(StatusCode.NOT_FOUND, "Requested resource not found")
    return resource
```

On the client side, you need to handle errors received from the server. For example, when a StatusCode.NOT_FOUND error occurs, you can tell the user that the resource was not found.

```python
import grpc
from grpc import StatusCode

try:
    response = stub.GetResource(request)
except grpc.RpcError as e:
    # Failed calls raise RpcError; code() and details() carry the server's status.
    if e.code() == StatusCode.NOT_FOUND:
        print("Resource not found:", e.details())
    else:
        print("An error occurred:", e.details())
```